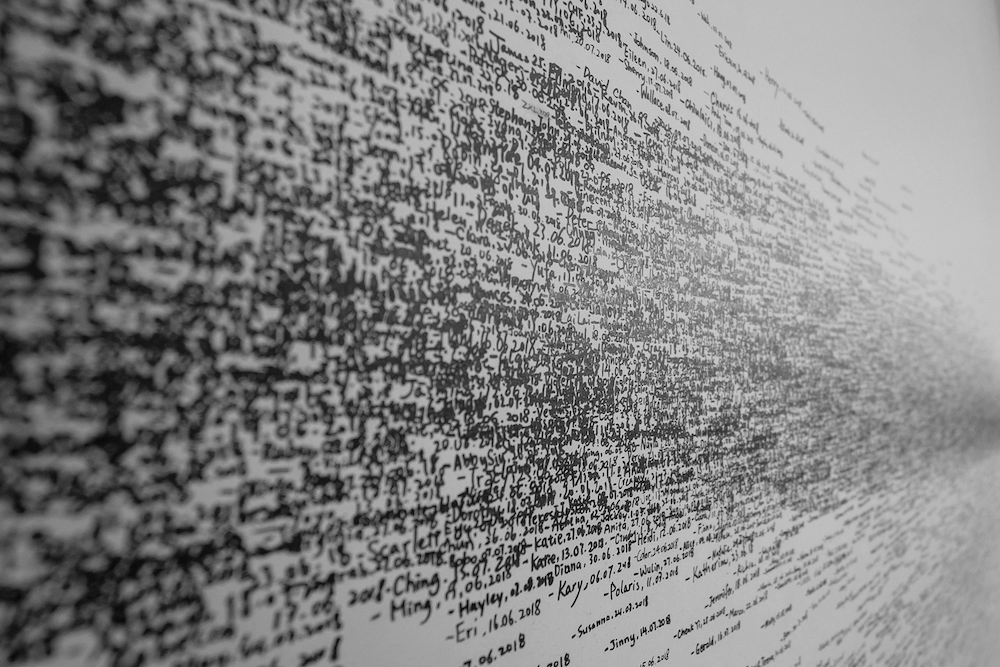

Until conversational AI can keep pace with the breadth and spontaneity of human conversation, it will fail to create the human-like connection and companionship users now crave from voice-enabled devices. By developing market-changing open domain conversational question answering capability, we will give the Alana-powered devices of the future the capability to chat freely and informatively on any topic a user chooses. Let us explain.

Today’s data-based chatbots achieve superficially impressive results when interacting in their comfort zone (or domain). Our daily interactions with more and more voice agents are starting to feel convincingly ‘real’ and deliver satisfactory responses. But it only takes a device to utter – “Can you repeat that? I didn’t understand the question” – to shatter the illusion, reminding the user they’re in fact talking to a computer. Cue brakes slammed on: the conversation stalls, and the human user retreats, disillusioned and cursing technology for its ineptitude.

The restricted scope of question answering is one of the most significant limitations in existing conversational technology. It’s something Alana is investing heavily in changing, by developing market-changing open domain conversational question answering capability.

What is open domain conversational question answering?

Currently, most AI agents tend to be trained to respond within a defined topic area. And they will perform competently as long as you don’t ask questions that stray out of their programmed field.

Open domain conversational question answering addresses this limitation by lifting the restrictions around the topics that a conversational AI agent can process and answer. In other words, it’s about developing the techniques and approaches that allow virtual agents to engage with humans on any subject. This allows more wide-ranging, freeform conversations. Let’s break this down.

Open domain vs closed domain

Open domain and closed domain are two different approaches to building a conversational AI platform. Which one you choose boils down to the scope, depth and ambiguity of the conversations you expect your system to work with.

- Closed domain limits conversation to specified topics, giving chat a neatly defined scope and set objectives

A closed domain system is built to understand certain defined lines of questioning. This type of AI agent is trained to focus on a limited set of topics, and this means it can provide pre-programmed responses only in these areas. Stray off topic and expect a canned, “I don’t understand” response.

While closed domain can work when you’re ordering a pizza or making a reservation, not all conversations follow this predetermined style of one-to-one question and answer exchanges. This is why a common complaint aimed at run-of-the-mill chatbots is that they’re domain specific.

- Open domain liberates conversational AI to cover more topics, allowing conversation to flow more freely

With open domain, conversation can go in more directions. Virtual agents built in this way can hold broader, longer, more factually relevant conversations. With chat able to wander between a multitude of topics, interactions become more satisfying and feel more free-flowing and engaging. Alana is developed to simulate this more human-like conversational style.

The science of conversational question answering

At a user level, it all seems so simple. You ask a question, your device understands what you want and gives you the answer you’re looking for. But the ways different platforms source and retrieve information, and then formulate answers, can vary widely.

In simple terms, most run-of-the-mill chatbots can be described as ‘single-shot’: they’re programmed to answer a defined set of questions, and these queries must be carefully worded. These platforms can’t read context.

In contrast, a more advanced conversational system, like Alana, allows for more flexibility in how questions are phrased. For example, it can answer questions in more depth by picking up the context from dialogue.

Take this example…

Imagine you’re interested in the population of the neighbourhood of Blackhall in Edinburgh. As there are other areas in the UK called ‘Blackhall’, to get the appropriate answer from a standard chatbot you would need to ask specifically – “What is the population of Blackhall in Edinburgh in Scotland?”

However, Alana can read context and clarify ambiguities in a more casually phrased question. For example:

User: What is the population of Blackhall?

Alana: You mean Blackhall in London, or Blackhall in Edinburgh?

User: In Edinburgh

Alana: [Gives response]
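To make the clarification step concrete, here is a minimal sketch of how a system might notice an ambiguous place name and ask which one the user means. It is an illustration only, not a description of how Alana is built: the `GAZETTEER` lookup table, the `answer_population` function and the placeholder answers are all hypothetical.

```python
# Hypothetical gazetteer mapping an ambiguous place name to candidate regions.
# The stored values are placeholders, not real population figures.
GAZETTEER = {
    "blackhall": {
        "edinburgh": "[population of Blackhall in Edinburgh]",
        "london": "[population of Blackhall in London]",
    },
}


def answer_population(place, region=None):
    """Answer directly when the place is unambiguous, otherwise ask which one."""
    candidates = GAZETTEER.get(place.lower())
    if not candidates:
        return "Sorry, I don't know that place."
    if region is None:
        if len(candidates) == 1:
            # Only one known place with this name, so no clarification is needed.
            return next(iter(candidates.values()))
        # Several places share the name: ask a clarifying question.
        options = " or ".join(
            f"{place} in {name.title()}" for name in sorted(candidates)
        )
        return f"You mean {options}?"
    return candidates.get(region.lower(), f"I don't know a {place} in {region}.")


print(answer_population("Blackhall"))
print(answer_population("Blackhall", "Edinburgh"))
```

The first call returns a clarifying question, and the second, with the region supplied by the user’s follow-up, returns the placeholder answer.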

This also allows Alana to hold longer conversations that delve deeper into a question without the constant need to reiterate context and repeat specific wording. For example:

User: How is coconut milk made?

Alana: It’s obtained by pressing the grated coconut meat, usually with hot water added which extracts the coconut oil, proteins, and aromatic compounds.

User: What recipes do we use it in?

Alana: Coconut milk is used in various recipes ranging from savoury dishes – such as “rendang”, “soto”, “gulai”, “mie celer”, “sayur lodeh”, “gudeg”, and “opor ayam” – to sweet desserts.
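One simple way to picture this context carry-over is as query rewriting: the system remembers the current topic and substitutes it for a pronoun in the follow-up question before looking anything up. The sketch below assumes exactly that and nothing more. The `DialogueContext` class is hypothetical, the topic is set by hand, and real systems typically rely on far more capable language understanding than a pronoun swap.

```python
import re


class DialogueContext:
    """Tracks the current topic so follow-up questions can omit it."""

    def __init__(self):
        self.topic = None

    def set_topic(self, topic):
        # In this sketch the caller supplies the topic explicitly.
        self.topic = topic

    def rewrite(self, question):
        """Replace the first standalone pronoun with the remembered topic."""
        if self.topic is None:
            return question
        return re.sub(
            r"\b(it|that|this)\b",
            lambda match: self.topic,
            question,
            count=1,
            flags=re.IGNORECASE,
        )


context = DialogueContext()
context.set_topic("coconut milk")
print(context.rewrite("What recipes do we use it in?"))
```

The rewritten question (“What recipes do we use coconut milk in?”) can then be answered without the user repeating the subject.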

How do virtual agents source information?

Behind the scenes, AI agents use different methods (known as ‘retriever’ models) to process user queries and find the information needed to formulate a response. The choice of retriever can dramatically alter the scope and sophistication of question answering ability.

Most run-of-the-mill chatbots are what we term ‘FAQ all-rounders’ – they source answers by identifying keywords in a query, finding the closest matching question and answer pair from a bank of FAQs and replying with a human-authored answer. Pairing a user query to a pre-assembled response in this way can be highly effective if conversations are closed domain and limited in scope. However, in an open domain scenario, it’s impossible to create an endless list of prepared answers for the system to recite. Cue system failure.
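As a rough illustration of this FAQ-matching approach, the sketch below scores a user query against each stored question by word overlap and falls back to a canned reply when nothing matches well enough. The FAQ entries, the `faq_answer` function and the 0.5 threshold are invented for the example, and word overlap is just one possible way to measure the “closest match”.

```python
import re

# Hypothetical FAQ bank of question and human-authored answer pairs.
FAQ = {
    "what are your opening hours": "We are open from 9am to 5pm.",
    "how do i reset my password": "Use the 'Forgot password' link on the sign-in page.",
}


def tokens(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def faq_answer(query, threshold=0.5):
    """Return the answer of the best-overlapping FAQ question, or a canned fallback."""
    query_words = tokens(query)
    best_score, best_answer = 0.0, None
    for question, answer in FAQ.items():
        question_words = tokens(question)
        union = query_words | question_words
        # Jaccard similarity: shared words divided by all distinct words.
        score = len(query_words & question_words) / len(union) if union else 0.0
        if score > best_score:
            best_score, best_answer = score, answer
    if best_score >= threshold:
        return best_answer
    return "I don't understand."


print(faq_answer("How do I reset my password?"))
print(faq_answer("How is coconut milk made?"))
```

The second query falls outside the FAQ bank, so the sketch can only return its fallback, which is the closed domain limitation in miniature.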

As conversational AI has developed, two alternative methods have emerged to overcome the limitations of ‘FAQ all-rounders’.

- The ‘Wikipedia’ method: System searches a fixed, large database of documents (e.g. the whole of Wikipedia) for the most relevant passages and entries to the user query.

- The ‘Search Engine’ method: System issues the query to an external search engine (such as Google or Bing) and uses the top ranking snippets from a search.
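The ‘Wikipedia’ method can be sketched as ranking a fixed set of passages by how well their words match the query. The example below uses a simple TF-IDF style score over two passages paraphrased from this article. The `rank_passages` function and the scoring formula are assumptions made for illustration, and production retrievers are considerably more sophisticated.

```python
import math
import re
from collections import Counter

# A tiny stand-in for a fixed document collection.
PASSAGES = [
    "Coconut milk is obtained by pressing the grated coconut meat, "
    "usually with hot water added.",
    "Blackhall is a neighbourhood of Edinburgh.",
]


def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_passages(query, passages, top_k=1):
    """Return up to top_k passages with a non-zero score, best first."""
    docs = [Counter(tokenize(passage)) for passage in passages]
    total = len(docs)

    def idf(term):
        # Terms that appear in fewer passages receive a higher weight.
        doc_freq = sum(1 for doc in docs if term in doc)
        return math.log((total + 1) / (doc_freq + 1)) + 1.0

    query_terms = set(tokenize(query))
    scored = []
    for passage, doc in zip(passages, docs):
        score = sum(doc[term] * idf(term) for term in query_terms)
        scored.append((score, passage))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for score, passage in scored[:top_k] if score > 0]


print(rank_passages("How is coconut milk made?", PASSAGES))
```

Because the collection is fixed and curated, every passage the system can return is known in advance, which is the credibility advantage of this approach over open web search.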

Neither approach is without flaws. In fact, we could write a separate article discussing the advantages and disadvantages of each of these methods. But given the potential issues with the credibility (and safety) of the sources that are retrieved from more open search engines, Alana favours the ‘Wikipedia’ approach.

If the answer isn’t pre-assembled, how does a search result turn into a reply?

There are two approaches to generating answers – extractive and abstractive answering.

The simpler of the two is ‘extractive’. This is where a phrase is ‘copied and pasted’ from one or more of the sources retrieved and read out verbatim as the response. The problem is that a featured snippet read out from a Google search (or similar) might be vaguely relevant but is unlikely to directly answer the user query.

More advanced systems (like Alana) are trained in ‘abstractive’ answering. This means Alana reads the results retrieved from a search and uses them to formulate a response. This might mean paraphrasing or combining several sources to answer a query more directly.

The challenge of factuality

With the rise of abstractive answering and more wide-ranging retriever methods, open domain conversational question answering is progressing quickly. However, there remain challenges for the industry to overcome. Most of these challenges stem from the risk that open domain systems can confidently respond with information that isn’t correct. Because the response is fluent and eloquent, users can be easily convinced and misled by these hallucinated answers. This can happen in many scenarios, for example:

- The virtual agent innocently constructs an inaccurate response by wrongly piecing together information from various sources.

- The system fails to detect ambiguity in a question, resulting in a grounding failure that leads to the wrong or inappropriate information being retrieved or generated.

- The answer is generated without consideration for the nuances or context of the conversation or the personal biases of the person asking the question.

- The conversational AI is asked to answer questions on sensitive or controversial matters.

- Responses can appear inconsistent, lack common sense or generally seem at odds with general knowledge.

Sometimes an inappropriate answer is nothing more than a slight annoyance to the user (e.g. it mixes up two people with the same name). But an AI system that hallucinates something more damaging (e.g. provides responses that promote fake news, conspiracy theories or extremist or discriminatory views) has ethical consequences and the potential to cause real-world harm.

Now and next

The majority of conversational models remain closed domain. This is because, for the reasons we outline above, open domain conversational question answering is incredibly complex and difficult to implement. At Alana, we take the safety and ethics of conversational AI very seriously. We are investing considerable research into tackling the challenges above, evolving an approach to open domain conversational question answering that creates a satisfying user experience and delivers responses our customers can trust.